I've had a front-row seat to something that doesn't happen often in financial services: genuine, measurable transformation driven by a single platform decision. Working with dozens of banks, asset managers, insurers, and fintechs across our portfolio at Blue Orange Digital, I've watched Snowflake evolve from "the data warehouse we migrated to" into something much more consequential: a unified operating layer for analytics, machine learning, and now autonomous AI agents that are starting to reshape how these firms actually run.

This isn't a Snowflake marketing piece. It's a field report from someone who's been in the architecture sessions, the data governance reviews, and the sprint retros where real teams are making real bets on where the value sits. And in 2026, two areas are pulling away from the pack: advanced data analytics and agentic AI. Here's what we're seeing, why it matters, and where the smart money is flowing.

The Foundation That Made Everything Else Possible

Before we get into the headline capabilities, it's worth acknowledging a truth that doesn't get enough attention: the firms seeing the most value from Snowflake's AI and analytics stack are the ones that invested in their data foundation first. Full stop.

At Blue Orange Digital, we've spent years migrating clients off legacy on-prem data warehouses (Teradata, Netezza, aging Hadoop clusters) and onto Snowflake. That work was often positioned as a cost and performance play, and it was. But the second-order effect has turned out to be far more valuable: these organizations now have clean, governed, centralized data sitting in one place with consistent access controls. That's the precondition for everything I'm about to describe.

The firms that skipped the foundation work and bolted AI onto fragmented data landscapes are the ones now circling back to us, asking why their models are hallucinating on bad data or why their compliance team is blocking every production deployment. If you're reading this and you haven't completed your cloud migration, that's step one. Not step zero. Step one.

Data Analytics: Moving from Dashboards to Decision Intelligence

Real-Time Risk Modeling at Scale

The most impactful analytics workload we're deploying on Snowflake right now isn't a dashboard refresh; it's real-time risk computation running natively inside the data platform. Traditional risk models at mid-market banks and regional lenders have long been batch-oriented: run the VaR calculation overnight, hand the report to the desk by 7 AM. That model is dying.
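One common form of that overnight VaR job is historical simulation. Here is a minimal stdlib-only sketch, assuming a list of daily portfolio P&L values (losses negative) and one simple quantile convention; other conventions interpolate between observations. The function name and sample numbers are illustrative, not a client model.

```python
import math

def historical_var(pnl, confidence=0.99):
    """One-day historical-simulation VaR, returned as a positive loss amount.

    pnl: daily portfolio profit-and-loss observations (losses are negative).
    confidence: e.g. 0.99 for 99% VaR.
    """
    if not pnl:
        raise ValueError("need at least one P&L observation")
    losses = sorted(-x for x in pnl)  # convert P&L to losses, ascending
    # Take the loss at the chosen quantile (no interpolation).
    idx = min(len(losses) - 1, math.ceil(confidence * len(losses)) - 1)
    return max(losses[idx], 0.0)      # a profitable tail means zero VaR

# Hypothetical ten days of P&L, just to show the call.
daily_pnl = [120.0, -340.0, 55.0, -80.0, 210.0, -15.0, -500.0, 30.0, 95.0, -60.0]
print(historical_var(daily_pnl, 0.90))  # → 340.0
```

Moving this inside the platform does not change the math; it removes the extract step that used to feed it.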

What we're building instead leverages Snowflake's Snowpark for Python to execute risk models directly against live data, eliminating the extract-transform-load overhead that used to add hours of latency. One client, a multi-strategy hedge fund, moved their entire factor risk pipeline into Snowpark, cutting their calculation window from four hours to under twenty minutes. The key technical insight was using Snowflake's elastic compute to spin up dedicated warehouses for the heavy linear algebra, then spin them down immediately after. The cost profile ended up being 40% lower than their previous on-prem grid compute, with dramatically better latency.
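The "heavy linear algebra" in a factor risk pipeline is, at its core, portfolio variance under a linear factor model: variance = w'BFB'w + w'Dw, where w holds asset weights, B the factor exposures, F the factor covariance, and D the diagonal specific variances. The sketch below computes it in plain Python so it runs anywhere; it assumes B, F, and D have already been estimated, and all names are hypothetical. A production version would vectorize this (for example with NumPy) rather than loop.

```python
import math

def factor_risk(weights, exposures, factor_cov, specific_var):
    """Portfolio volatility under a linear factor model.

    weights:      n asset weights (w)
    exposures:    n x k matrix B of asset loadings on k factors
    factor_cov:   k x k factor covariance matrix F
    specific_var: n idiosyncratic variances (the diagonal of D)
    Returns sqrt(w'BFB'w + w'Dw).
    """
    k = len(factor_cov)
    # Portfolio factor exposure x = B'w, one entry per factor.
    x = [sum(w * row[j] for w, row in zip(weights, exposures)) for j in range(k)]
    # Systematic variance x'Fx.
    systematic = sum(x[i] * factor_cov[i][j] * x[j] for i in range(k) for j in range(k))
    # Idiosyncratic variance: sum of w_i^2 * d_i.
    idio = sum(w * w * d for w, d in zip(weights, specific_var))
    return math.sqrt(systematic + idio)
```

The expensive part at scale is estimating F and multiplying through thousands of assets, which is the kind of burst workload the elastic-warehouse pattern above suits.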

For teams evaluating this pattern, the critical design decision is how you handle model versioning and feature store integration within Snowflake versus maintaining a separate ML platform. We've found that for risk models with well-defined feature sets, keeping everything inside Snowflake via Snowpark ML simplifies the deployment pipeline significantly. For more experimental, research-heavy workflows, a hybrid approach with a feature store like Feast or Tecton feeding into Snowflake-hosted models tends to scale better.

Unstructured Data as a First-Class Analytics Asset

Here's a trend that has genuinely surprised me in its pace of adoption: financial services firms are finally treating unstructured data as analytically valuable, and Snowflake's Cortex AISQL functions are the catalyst.

Consider what a typical asset management firm sits on: thousands of earnings call transcripts, sell-side research PDFs, internal investment memos, regulatory filings, news feeds, and client correspondence. Historically, that data lived in SharePoint folders and email archives, effectively invisible to analytics teams. With Cortex AISQL, specifically the AI-powered extraction and transcription functions now in public preview, firms can run structured queries against this content at scale.

We recently deployed a pipeline for a large insurance company that ingests claims documentation (PDFs, scanned forms, adjuster notes) into Snowflake, uses Cortex AISQL to extract structured fields, and then runs anomaly detection models against the extracted data to flag potential fraud. The entire pipeline lives inside Snowflake. No external NLP service, no separate document processing infrastructure. The compliance team loved it because the data never left their governed environment.
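The anomaly-detection stage can start as simply as a robust outlier score over the extracted fields. The sketch below assumes extraction has already produced one record per claim with a numeric `claim_amount`; the field names, the median-absolute-deviation score (a swapped-in technique, not necessarily what the client used), and the 3.5 threshold are all illustrative assumptions.

```python
from statistics import median

def flag_outliers(claims, field="claim_amount", threshold=3.5):
    """Flag records whose field deviates strongly from the median.

    Uses the modified z-score 0.6745 * (x - median) / MAD, which is less
    distorted by the very outliers we are looking for than mean/stdev.
    """
    values = [c[field] for c in claims]
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread in the data, so nothing can be scored
    flagged = []
    for c in claims:
        score = 0.6745 * (c[field] - med) / mad
        if abs(score) > threshold:
            flagged.append({**c, "score": round(score, 2)})
    return flagged
```

In practice the fraud models would combine many extracted fields, but the shape is the same: structured fields out of documents, then scoring on those fields.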

For the technical folks: the extraction functions support schema-on-read patterns, which means you don't need to pre-define your target schema before ingestion. You define what you want to extract at query time, which is a massive flexibility gain when you're dealing with heterogeneous document types across lines of business.
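To make the schema-on-read idea concrete without guessing at Cortex function signatures, here is a plain-Python analogy: the target schema is an argument supplied at query time rather than fixed at ingestion. Regular expressions stand in for the model-based extraction, and the field names, patterns, and sample documents are hypothetical.

```python
import re

def extract(documents, schema):
    """Apply a query-time schema to raw text documents.

    schema maps output field name -> regex with one capture group.
    A field that is absent comes back as None instead of failing the row,
    which lets heterogeneous document types share a single query.
    """
    rows = []
    for doc in documents:
        row = {}
        for field, pattern in schema.items():
            m = re.search(pattern, doc, flags=re.IGNORECASE)
            row[field] = m.group(1).strip() if m else None
        rows.append(row)
    return rows

docs = [
    "Claim No: CLM-1042\nPolicy: P-88\nAmount: $12,400",  # structured form
    "Adjuster note: vehicle towed. Policy: P-91",          # free-text note
]
schema = {
    "claim_id": r"claim no:\s*(\S+)",
    "policy": r"policy:\s*(\S+)",
    "amount": r"amount:\s*\$?([\d,]+)",
}
rows = extract(docs, schema)
```

Adding a new field means editing the schema passed at query time, not re-ingesting the documents.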

Conversational BI and the Democratization of Data Access

Snowflake Intelligence, Snowflake's conversational business intelligence layer, is moving from novelty to necessity faster than I expected. The use case that keeps coming up in our financial services engagements isn't the data scientist asking complex questions. It's the portfolio manager, the loan officer, or the compliance analyst who needs an answer from data but has never written a SQL query.

At one private equity firm we work with, the deal team was spending three to four hours per week waiting for the data team to pull portfolio company performance metrics. We deployed Snowflake Intelligence on top of their existing Snowflake warehouse with a well-defined semantic model, and within two weeks the deal team was self-serving roughly 70% of their data requests through natural language. The data team didn't lose headcount; they reallocated those hours to building the next generation of portfolio analytics.

The technical prerequisite that makes or breaks this: semantic model quality. Snowflake Intelligence is only as good as the Cortex Analyst semantic definitions you give it. Garbage in, garbage out. We spend significant time with clients defining clean, well-documented semantic layers before turning on the conversational interface. That upfront investment pays for itself ten times over.
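Why semantic quality matters is easier to see with a toy semantic layer. The sketch below is not the Cortex Analyst semantic model format (Snowflake defines its own specification for that); it is a hypothetical Python stand-in in which business terms map to vetted SQL expressions, so a natural-language front end only picks terms and never improvises column logic. Table and column names are invented.

```python
from dataclasses import dataclass

@dataclass
class Metric:
    sql: str          # vetted aggregate expression, e.g. "SUM(revenue_usd)"
    description: str  # plain-language definition the NL layer can match against

@dataclass
class SemanticModel:
    table: str
    metrics: dict     # business term -> Metric
    dimensions: dict  # business term -> column expression

    def to_sql(self, metric, by):
        """Compile a (metric, dimension) request into SQL, or fail loudly."""
        if metric not in self.metrics:
            raise KeyError(f"undefined metric: {metric!r}")
        if by not in self.dimensions:
            raise KeyError(f"undefined dimension: {by!r}")
        dim = self.dimensions[by]
        expr = self.metrics[metric].sql
        return f"SELECT {dim} AS {by}, {expr} AS {metric} FROM {self.table} GROUP BY {dim}"

model = SemanticModel(
    table="portfolio_company_kpis",
    metrics={"revenue": Metric("SUM(revenue_usd)", "Total recognized revenue, USD")},
    dimensions={"quarter": "fiscal_quarter"},
)
print(model.to_sql("revenue", by="quarter"))
```

The design choice worth copying is the loud failure: a question about an undefined metric should be refused, not answered with a guessed column.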

Agentic AI: The Shift from "Ask the Data" to "The Data Acts"

If the analytics story is about making data more accessible and computationally powerful, the agentic AI story is about something fundamentally different: making data operational. And this is where 2026 is getting genuinely interesting.

Snowflake Cortex AI for Financial Services: Why It Matters

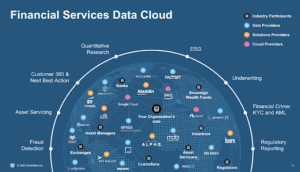

In October 2025, Snowflake launched Cortex AI for Financial Services, their first industry-specific AI suite, built in partnership with Anthropic, FactSet, MSCI, and Salesforce. This wasn't a rebrand of existing features. It was a deliberate architecture decision to bring AI capabilities inside the governance perimeter that financial services requires.

The significance for our clients has been immediate. The single biggest blocker we've encountered in deploying AI in regulated environments is the data residency and governance question: "Where does the data go when it hits the model?" With Cortex AI, the answer is nowhere. The models run inside Snowflake. The data never leaves the governed environment. That single architectural property has unblocked more production AI deployments in the last six months than any model improvement or prompt engineering technique.

The MCP Server: Universal Connectivity for AI Agents

The capability that has generated the most excitement in our technical conversations is the Snowflake-managed Model Context Protocol (MCP) Server, now in public preview. MCP is becoming the standard protocol for connecting AI agents to enterprise data, and Snowflake's managed implementation is a significant accelerator.

Here's the practical impact: before MCP, connecting an AI agent (whether built on Anthropic's Claude, Salesforce's Agentforce, UiPath, or CrewAI) to your Snowflake data required custom integration work. API wrappers, authentication plumbing, query translation layers, access control mapping. For every agent platform you wanted to connect, you were looking at weeks of engineering.

With the managed MCP Server, you configure it once. Your Snowflake data, governed by your existing Role-Based Access Control policies, becomes available to any MCP-compatible agent platform. The agent can query structured data via Cortex Analyst and unstructured data via Cortex Search, all through a single, standardized interface.
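MCP messages are JSON-RPC 2.0, and agents invoke server capabilities through `tools/call` requests carrying a tool `name` and its `arguments`. The sketch below shows the shape of such a request and a toy dispatcher that checks a role grant before routing to a structured or unstructured tool. The tool names, roles, grant table, and error code are hypothetical, results are simplified, and in the managed server access is governed by Snowflake RBAC rather than by code like this.

```python
import json

# Hypothetical grants: which roles may call which tools.
GRANTS = {
    "COMPLIANCE_ANALYST": {"transactions_analyst", "comms_search"},
    "MARKETING": {"comms_search"},
}

def make_tool_call(request_id, tool, arguments):
    """Build an MCP tools/call request in a JSON-RPC 2.0 envelope."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })

def dispatch(raw_request, role, tools):
    """Check the caller's role, then route to the named tool handler."""
    req = json.loads(raw_request)
    name = req["params"]["name"]
    if name not in GRANTS.get(role, set()):
        # -32001 is an arbitrary code from JSON-RPC's server-error range.
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32001, "message": f"{role} may not call {name}"}}
    result = tools[name](**req["params"]["arguments"])
    return {"jsonrpc": "2.0", "id": req["id"], "result": result}

# Stub handlers standing in for structured and unstructured retrieval.
tools = {
    "transactions_analyst": lambda question: {"rows": 3, "question": question},
    "comms_search": lambda query: {"hits": []},
}
req = make_tool_call(1, "transactions_analyst", {"question": "wires over $10k last week"})
print(dispatch(req, "COMPLIANCE_ANALYST", tools))
print(dispatch(req, "MARKETING", tools))
```

The point of the pattern is that every agent platform speaks the same envelope, so the permission check lives in one place instead of being rebuilt per integration.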

We've been prototyping this with a few clients, and the pattern that keeps emerging is what I'd call the "multi-agent research desk." Imagine a compliance analyst who needs to investigate a flagged transaction. Instead of manually pulling transaction data, checking it against customer profiles, cross-referencing regulatory watch lists, and searching internal communications (a workflow that might take two to three hours), an orchestrated set of agents does it in minutes. One agent queries the structured transaction data. Another searches unstructured communications via Cortex Search. A third pulls in external context from FactSet or MSCI data available through Cortex Knowledge Extensions. The analyst gets a synthesized brief with citations and can make a decision.

That's not a lab demo. We're building variations of this right now.
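A minimal orchestration sketch of that research desk: three hypothetical agents run concurrently and a synthesis step merges their findings while keeping each citation attached. The agent functions return canned data; in a real build each would call a tool (for example through the MCP server), and all names and citation strings here are assumptions.

```python
import asyncio
from dataclasses import dataclass

@dataclass
class Finding:
    source: str    # which agent produced it
    summary: str
    citation: str  # pointer back to the underlying record or document

async def transaction_agent(txn_id):
    # Placeholder for a structured-data query.
    return Finding("transactions", f"{txn_id}: 4 wires to a new counterparty in 48h", f"txn:{txn_id}")

async def comms_agent(txn_id):
    # Placeholder for a search over internal communications.
    return Finding("communications", "No messages reference the counterparty", "search:comms")

async def context_agent(txn_id):
    # Placeholder for external context from a third-party data provider.
    return Finding("external", "Counterparty not on current watch lists", "ext:watchlist")

async def investigate(txn_id):
    # Run the three agents concurrently; gather preserves argument order.
    findings = await asyncio.gather(
        transaction_agent(txn_id), comms_agent(txn_id), context_agent(txn_id)
    )
    lines = [f"- [{f.source}] {f.summary} ({f.citation})" for f in findings]
    return f"Brief for {txn_id}\n" + "\n".join(lines)

if __name__ == "__main__":
    print(asyncio.run(investigate("TXN-001")))
```

Keeping the citation on every finding is what lets the analyst verify the brief rather than trust it.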

The Data Science Agent: Accelerating Model Development

For the quantitative teams at our financial services clients (the data scientists building risk models, fraud detection systems, and customer propensity scores), the Snowflake Data Science Agent is solving a problem they've complained about for years: the time tax of data preparation.

The Data Science Agent functions as an AI coding assistant that automates data cleaning, feature engineering, model prototyping, and validation. In practice, this means a data scientist working on a credit scoring model can describe their target variable and candidate features in natural language, and the agent generates the preprocessing code, handles missing value imputation, creates derived features, and builds an initial model, all within Snowflake.
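To illustrate the kind of preprocessing such an agent might draft (and a human would then review), here is a small stdlib sketch for a hypothetical credit dataset: median imputation for missing income plus a derived debt-to-income feature. Column names and the choice of median imputation are assumptions, not output captured from the Data Science Agent.

```python
from statistics import median

def preprocess(rows):
    """Impute missing annual income with the median and add a debt-to-income ratio.

    rows: list of dicts with 'income' (annual, may be None) and 'monthly_debt'.
    Returns new dicts; the input rows are left unchanged.
    Raises statistics.StatisticsError if no income is observed at all.
    """
    fill = median(r["income"] for r in rows if r["income"] is not None)
    out = []
    for r in rows:
        income = r["income"] if r["income"] is not None else fill
        monthly_income = income / 12
        out.append({
            **r,
            "income": income,
            "income_imputed": r["income"] is None,  # flag kept for reviewers
            "dti": round(r["monthly_debt"] / monthly_income, 3) if monthly_income else None,
        })
    return out
```

The `income_imputed` flag is the sort of detail a reviewer should insist on for regulated models, since imputed values can carry fair-lending implications.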

We've seen this cut the "data wrangling" phase of model development from roughly 60% of a data scientist's time to under 20%. That's not a small efficiency gain; it's a structural shift in how quantitative teams operate. The scientists spend more time on model architecture decisions, feature selection, and business interpretation, and less time writing pandas transformations and debugging data type mismatches.

A word of caution from our experience: the Data Science Agent is excellent for prototyping and initial exploration, but production model code still needs human review, particularly for models that will be used in regulated decision-making (lending, insurance underwriting, trading). We treat the agent's output as a strong first draft that accelerates the workflow, not as a finished artifact.

Cortex Knowledge Extensions: Enriching AI with Third-Party Intelligence

One of the quieter but high-impact capabilities in the Cortex AI for Financial Services suite is Knowledge Extensions, which are now generally available. These allow financial institutions to tap into premium unstructured data (from publishers like CB Insights, FactSet, Investopedia, The Associated Press, and The Washington Post) directly within their Snowflake environment.

The use case that keeps surfacing in our client conversations: enriching internal analytics with external market context. A portfolio manager's internal data tells them what positions they hold and what their P&L looks like. Knowledge Extensions let an AI agent pull in real-time market commentary, earnings analysis, and macroeconomic research to contextualize that data. The agent doesn't just tell you your energy sector exposure is up 12%; it tells you that recent OPEC+ production decisions and pipeline capacity constraints are creating tailwinds that align with (or contradict) your current positioning.
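The "exposure is up 12%" half of that answer is plain arithmetic on internal positions; the agent's contribution is attaching context. A sketch, assuming positions arrive as (sector, market value) pairs and a hypothetical `commentary` dict stands in for content retrieved through Knowledge Extensions.

```python
def sector_exposure(positions):
    """Sum market value by sector. positions: iterable of (sector, market_value)."""
    totals = {}
    for sector, mv in positions:
        totals[sector] = totals.get(sector, 0.0) + mv
    return totals

def exposure_changes(prev, curr, threshold=0.10, commentary=None):
    """Return (sector, relative_change, context) for sectors that moved more
    than `threshold`, paired with any external commentary for that sector."""
    commentary = commentary or {}
    before, after = sector_exposure(prev), sector_exposure(curr)
    moves = []
    for sector, value in after.items():
        base = before.get(sector)
        if not base:
            continue  # new sector: relative change is undefined
        change = value / base - 1
        if abs(change) > threshold:
            moves.append((sector, round(change, 4), commentary.get(sector, [])))
    return moves
```

Only the sectors that cross the threshold get enriched, which keeps the agent's retrieval focused on moves that actually matter.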

For firms that have been paying for these data feeds separately and running their own ingestion pipelines, the governance and simplification benefits alone justify the switch.

Where the Real Value Is Converging

The most interesting pattern I'm watching across our client base isn't analytics or agentics in isolation; it's their convergence. The firms generating the most value are the ones building feedback loops between their analytical systems and their agentic workflows.

Here's what that looks like in practice: an analytics pipeline identifies an anomaly, such as an unusual concentration of late payments in a specific geographic region for a consumer lender. That anomaly triggers an agentic workflow that pulls in census data, local economic indicators, competitor pricing data, and internal underwriting policy documents. The agent synthesizes a brief for the credit risk team that includes not just the "what" but the "why," plus a set of recommended policy adjustments. The credit risk team reviews and approves the changes, and the adjusted parameters flow back into the analytical models.

That loop (detect, investigate, recommend, act, measure) is what separates organizations using Snowflake as a warehouse from organizations using Snowflake as an intelligence platform. And in financial services, where the cost of a slow decision can be measured in basis points or regulatory penalties, the speed of that loop is a genuine competitive advantage.
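The skeleton of that loop, stripped of the agentic investigation step, might look like the sketch below. The thresholds, the 0.43 default debt-to-income cap, and the "tighten by five points" rule are hypothetical placeholders for the agent's recommendation; the important structural piece is that parameters change only after human approval.

```python
def detect(late_by_region, loans_by_region, baseline, multiple=1.5):
    """Detect: regions whose late-payment rate exceeds `multiple` x baseline."""
    return {
        region: late_by_region.get(region, 0) / n
        for region, n in loans_by_region.items()
        if n and late_by_region.get(region, 0) / n > multiple * baseline
    }

def recommend(anomalies, max_dti):
    """Recommend: tighten the DTI cap by 5 points in each flagged region
    (a placeholder rule standing in for the agent's synthesized brief)."""
    return {region: round(max_dti.get(region, 0.43) - 0.05, 2) for region in anomalies}

def act(max_dti, recommendation, approved):
    """Act: apply the recommendation only if a human approved it."""
    if not approved:
        return dict(max_dti)
    return {**max_dti, **recommendation}

loans = {"north": 1000, "south": 800}
late = {"north": 30, "south": 72}
params = {"north": 0.43, "south": 0.43}
anomalies = detect(late, loans, baseline=0.03)
params = act(params, recommend(anomalies, params), approved=True)
```

The "measure" step is simply running `detect` again on the next period's data and checking whether the flagged region has normalized.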

What We're Advising Clients to Do Right Now

Based on what we're seeing across dozens of engagements, here's where we're pointing financial services firms in 2026:

- Solidify your data foundation. If your Snowflake environment still has data quality gaps, inconsistent governance, or incomplete migration from legacy systems, fix that before chasing agentic AI. The value of every AI capability is bounded by the quality of the data it operates on.

- Invest in your semantic layer. Cortex Analyst, Snowflake Intelligence, and the MCP Server all depend on well-defined semantic models. Treat your semantic layer as a product, not a byproduct. Staff it, version it, and iterate on it.

- Start with a single agentic use case that has clear ROI. Don't try to build a universal agent framework. Pick a specific workflow, such as compliance investigation, deal sourcing research, or claims triage, and build an end-to-end agentic solution. Prove the value, then expand.

- Pay attention to the MCP ecosystem. The Model Context Protocol is rapidly becoming the standard for agent-to-data connectivity. The firms that adopt it early will have a structural advantage as agent platforms mature and interoperability becomes expected.

- Engage a partner who's done this before. The intersection of financial services regulation, cloud data architecture, and AI engineering is a narrow specialization. At Blue Orange Digital, this is precisely where we live: we've been building Snowflake-native data platforms and AI solutions for financial institutions since before "agentic AI" was a term anyone used. That depth matters when you're deploying models that touch regulated data.

The financial services industry has spent the better part of a decade talking about data-driven transformation. In 2026, the firms running on Snowflake are finally closing the gap between aspiration and execution. The combination of mature data analytics capabilities and a rapidly emerging agentic AI stack is creating opportunities that didn't exist even twelve months ago. The question isn't whether to invest; it's how quickly you can move.

If your team is evaluating how to leverage Snowflake's data analytics or agentic AI capabilities, or if you're looking to accelerate a migration that's already in progress, reach out to Blue Orange Digital. We'd welcome the conversation.